Learning Concept Embeddings with a Transferable Deep Neural Reasoner

May 10 | KGC 2023 • 26m

We present a novel approach for learning embeddings of concepts from knowledge bases expressed in the ALC description logic. The embeddings reflect the semantics of the logic in such a way that the embedding of a complex concept can be computed from the embeddings of its parts using appropriate neural constructors. Embeddings for different knowledge bases are vectors in a shared vector space, shaped so that a single neural network can perform approximate subsumption checking for arbitrarily complex concepts across all the knowledge bases.
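To make the compositional idea concrete, here is a minimal sketch in plain Python. It is not the architecture from the talk: the embedding size, the single-layer constructors, the tuple encoding of concepts, and all names (`Reasoner`, `make_kb`, `subsumption_score`) are assumptions made for illustration, and the weights are random rather than trained. It only shows the shape of the approach: each knowledge base supplies its own embeddings for atomic concepts and roles, while the constructors and the subsumption head are shared.

```python
import math
import random

DIM = 8  # embedding size; an arbitrary choice for this sketch


def make_layer(rng, n_in, n_out):
    """Random weight matrix (n_out rows of n_in weights) plus a zero bias."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def apply_layer(layer, x, activation=math.tanh):
    """Affine map followed by an elementwise activation."""
    weights, bias = layer
    return [activation(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_kb(concept_names, role_names, seed):
    """Hypothetical per-knowledge-base embeddings (learned in a real system)."""
    rng = random.Random(seed)
    vec = lambda: [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
    return {"concepts": {n: vec() for n in concept_names},
            "roles": {n: vec() for n in role_names}}


class Reasoner:
    """Constructors and subsumption head shared by every knowledge base."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.neg = make_layer(rng, DIM, DIM)         # not C
        self.conj = make_layer(rng, 2 * DIM, DIM)    # C and D
        self.exists = make_layer(rng, 2 * DIM, DIM)  # exists r.C
        self.head = make_layer(rng, 2 * DIM, 1)      # scores "C is subsumed by D"

    def embed(self, concept, kb):
        """Build the embedding of a concept bottom-up from its parts."""
        op = concept[0]
        if op == "atom":
            return kb["concepts"][concept[1]]
        if op == "not":
            return apply_layer(self.neg, self.embed(concept[1], kb))
        if op == "and":
            parts = self.embed(concept[1], kb) + self.embed(concept[2], kb)
            return apply_layer(self.conj, parts)
        if op == "exists":
            parts = kb["roles"][concept[1]] + self.embed(concept[2], kb)
            return apply_layer(self.exists, parts)
        if op == "or":  # De Morgan: C or D = not (not C and not D)
            rewritten = ("not", ("and", ("not", concept[1]), ("not", concept[2])))
            return self.embed(rewritten, kb)
        if op == "forall":  # duality: forall r.C = not exists r.(not C)
            rewritten = ("not", ("exists", concept[1], ("not", concept[2])))
            return self.embed(rewritten, kb)
        raise ValueError(f"unknown constructor: {op}")

    def subsumption_score(self, sub, sup, kb):
        """Value in (0, 1); meaningless until the weights are trained."""
        pair = self.embed(sub, kb) + self.embed(sup, kb)
        return apply_layer(self.head, pair, activation=sigmoid)[0]


reasoner = Reasoner()
kb_a = make_kb(["Parent", "Person"], ["hasChild"], seed=1)
kb_b = make_kb(["Wine", "Drink"], ["madeFrom"], seed=2)

query = (("and", ("atom", "Person"), ("exists", "hasChild", ("atom", "Person"))),
         ("atom", "Parent"))
score_a = reasoner.subsumption_score(query[0], query[1], kb_a)
score_b = reasoner.subsumption_score(("atom", "Wine"), ("atom", "Drink"), kb_b)
```

The same `reasoner` object scores queries against both `kb_a` and `kb_b`; only the atomic embeddings differ. In a trained system, the shared weights and the per-knowledge-base embeddings would be fitted so that the score approximates the answer of a logical reasoner.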

Up Next in May 10 | KGC 2023

-

Leave no Thought Behind: Encoding Con...

Many industries store vast amounts of information as natural language. Current methods for composing this text into knowledge graphs parse a small set of relations from within a larger document. The author's specific diction is approximated by the vocabulary of the model. In domains where precise...

-

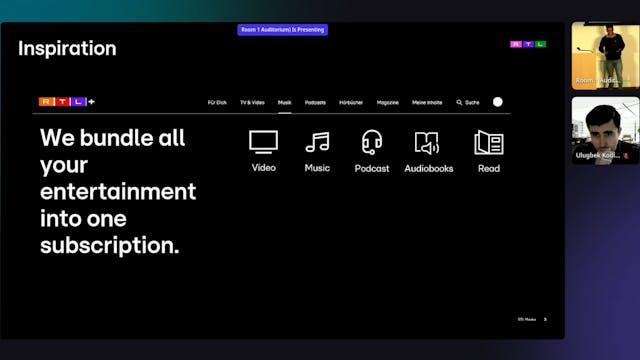

Building a Content Knowledge Graph fo...

We started our Knowledge Graph journey with a content knowledge graph that helps us unify and connect various media types for our multi-purpose streaming platform (RTL+). We include media, entities & enriched metadata from Movies, Series, Music, Podcasts and Audiobooks. But we soon realised that ...

-

AI, LLMs, and the Unknowable Knowledg...

Benn Stancil | Mode Co-founder + CTO Officer